The Trolley Problem is a Red Herring

I wrote this in March 2018 but, for some reason, never published it. Back then, there was an ethics debate revolving around the trolley problem. If I remember correctly, it was triggered by MIT’s Moral Machine.

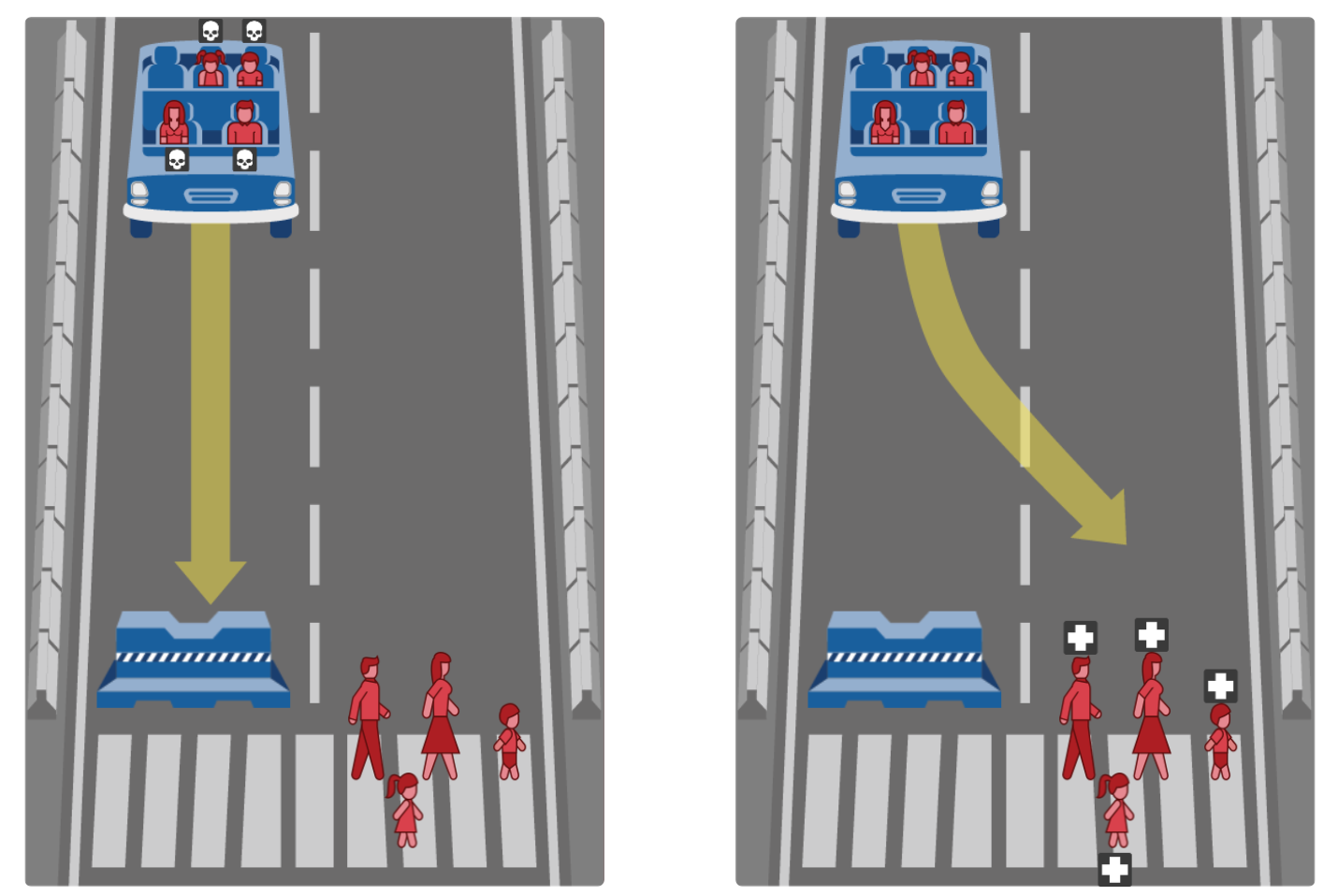

Example scenario from MIT's Moral Machine

For a while, the media loved to discuss the trolley problem for self-driving vehicles. Finally, thought experiments from theoretical ethics could be applied to something practical. Should your car kill as few people as possible? Should it consider a social score? Or should it rather save the owner?

In most cases, a human will not be able to make a conscious decision because there is not enough time. The almighty computer, on the other hand, can certainly compute a million ethical dilemmas a second.

The debate makes two assumptions. First, that the vehicle will have all the information it needs to assess the situation and match it to the equivalent variant of the trolley problem. Second, that a self-driving vehicle is likely to end up in a trolley-problem-like situation at all.

I have my doubts about the first assumption, given that a bit of noise can lead ML algorithms to confidently misclassify objects. Even assuming the vehicle never confuses a sack of potatoes with a human or vice versa, at best it will be able to assign probabilities to outcomes. I have not seen any trolley problem discussion for self-driving vehicles that is based on probabilities, though.

Anyway, let us look at the second assumption instead. When I say likely, I mean that we have to expect at least a few of these events per year in total, not that any individual vehicle is likely to experience one. Even a small probability of failure for a single item makes a failure likely at scale. Still, why would there be even a small probability of a trolley problem situation? Where the density of possible events is high, for example in a school zone, the vehicle should drive at a speed at which it can always stop in time, rather than having to choose between two crash scenarios. On the highway, crashes can almost always be avoided by driving at an appropriate speed. Sorry: if you were hoping your self-driving car would be a speed freak, you’ll be disappointed.
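To make the scale argument concrete: if an event has a small probability p per trip and trips are independent (an assumption), the probability of seeing it at least once in n trips is 1 - (1 - p)^n. The short Python sketch below computes this; the per-trip probability and trip count in it are invented for illustration and are not estimates of real self-driving statistics.

```python
import math


def prob_at_least_one(p: float, n: int) -> float:
    """Probability that an event with per-trial probability p occurs at least
    once in n independent trials, i.e. 1 - (1 - p)**n."""
    # log1p/expm1 avoid rounding (1 - p) to exactly 1.0 when p is tiny
    return -math.expm1(n * math.log1p(-p))


if __name__ == "__main__":
    # Hypothetical numbers: a one-in-a-billion chance per trip,
    # ten billion trips per year across a whole fleet.
    p_per_trip = 1e-9
    trips_per_year = 10_000_000_000
    print(f"expected events per year: {p_per_trip * trips_per_year:.1f}")
    print(f"P(at least one per year): {prob_at_least_one(p_per_trip, trips_per_year):.5f}")
```

With these made-up figures, a single trip almost never goes wrong, yet the fleet as a whole should expect about ten such events a year.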

What about people jumping in front of a car from behind a pillar while there is oncoming traffic? OK, sure, scenarios that force the car into such a situation can be constructed. They are just very unlikely to happen by chance. And even then, the answer should probably be for the car to drive slowly if it has to pass so close to a pillar that it cannot see people who might be behind it.
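The idea of driving slowly enough to always stop in time can be made concrete with the textbook stopping-distance model: during a reaction latency t the vehicle covers v·t, then it needs v²/(2a) to brake at a constant deceleration a. Requiring the total to stay within the visible distance d yields a maximum speed. This is a minimal sketch assuming constant deceleration on a level road; the sight distance, latency, and deceleration in the usage lines are illustrative guesses, not values for any real vehicle.

```python
import math


def stopping_distance(speed: float, reaction_time: float, decel: float) -> float:
    """Distance (m) needed to stop from `speed` (m/s): distance covered during
    the reaction time plus braking distance at constant deceleration `decel` (m/s^2)."""
    return speed * reaction_time + speed**2 / (2 * decel)


def max_safe_speed(sight_distance: float, reaction_time: float, decel: float) -> float:
    """Largest speed (m/s) whose stopping distance does not exceed `sight_distance`.

    Solves v * t + v**2 / (2 * a) = d for the positive root v.
    """
    t, a, d = reaction_time, decel, sight_distance
    return a * (-t + math.sqrt(t * t + 2 * d / a))


if __name__ == "__main__":
    # Illustrative assumptions: 10 m of visibility past the pillar,
    # 0.5 s system latency, 7 m/s^2 braking on a dry road.
    v = max_safe_speed(sight_distance=10.0, reaction_time=0.5, decel=7.0)
    print(f"max speed: {v:.1f} m/s ({v * 3.6:.0f} km/h)")
```

With these assumed numbers, the result is roughly 32 km/h, and it drops further as the visible distance past the pillar shrinks.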

I am calling the trolley problem a red herring not because it is impossible for a self-driving vehicle to get into such a situation (it is possible), but because the vehicle should avoid the situation in the first place. Also, there are far more likely problems: problems that should be part of the public discourse and that manufacturers should be pressured to address in order to prevent accidents.

Grounded Boeing 737 MAX in China by Windmemories, CC BY-SA 4.0 <https://creativecommons.org/licenses/by-sa/4.0>, via Wikimedia Commons

The grounding of the Boeing 737 MAX following two tragic crashes is an example of such problems. Boeing and the FAA favored cost-saving solutions that produced a flawed design. The details are too complex for this blog post, but the case shows that economic decision-making processes can have lethal consequences. I am convinced that paying closer attention to this kind of problem can save more lives than self-driving cars will cost in trolley problem scenarios, provided that rigorous safety standards are applied.

Autonomes Säulenblog